Kubernetes Autoscaling

Node Autoscaling

We use the Kubernetes Cluster Autoscaler to automatically add and remove nodes from the cluster. This ensures that we have enough compute available to support our workloads without paying for capacity that is no longer needed.

Kubernetes schedules pods onto nodes based on (among other things) each pod's requested resources. When no node has enough free capacity, the pod cannot be scheduled and remains in the Pending state. In that case, the autoscaler attempts to add a new node to the cluster so that the pod can be scheduled.

Example with EKS

We'll use AWS services for this example, although the same concept applies to GCP and Azure.

Note: This is a simplified example with a single autoscaling group. In our clusters we have multiple autoscaling groups distributed across availability zones for high availability.

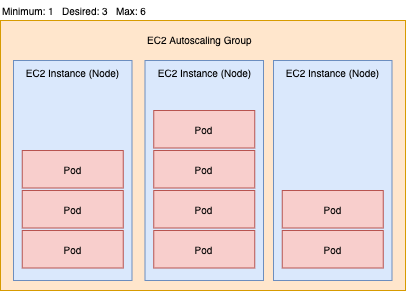

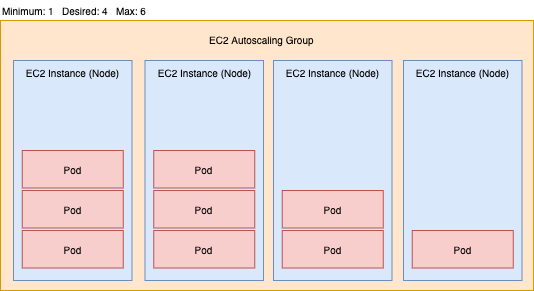

Let's say we have a cluster with this configuration:

In this example we have a single EC2 Autoscaling Group with a minimum node count of 1, a desired count of 3, and a maximum count of 6. We'll also assume that each instance has 4 CPUs and 8 GiB of memory available, and that each Kubernetes pod requests 1 CPU and 2 GiB of memory, meaning that each node has capacity for 4 pods.

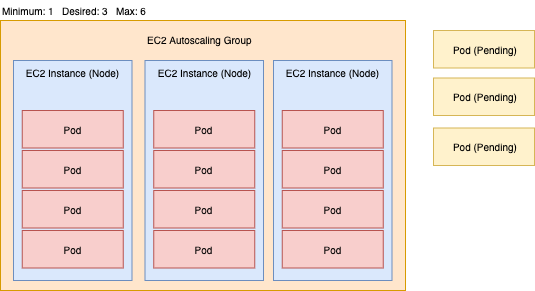

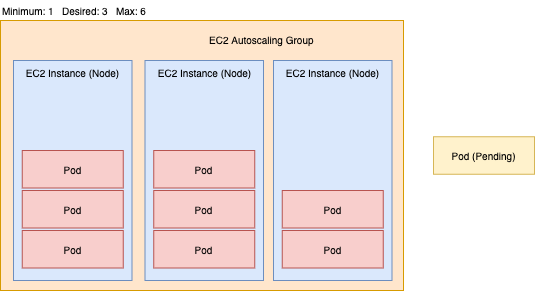
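The per-node capacity figure above can be checked with a little arithmetic. The following is a minimal sketch, assuming identical pods and ignoring anything else that consumes node resources in a real cluster (system daemons, DaemonSets, per-node pod limits). The `Resources` class and `pods_per_node` function are illustrative names, not part of any Kubernetes API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resources:
    cpu: int         # whole CPUs
    memory_gib: int  # GiB of memory


def pods_per_node(node: Resources, pod_request: Resources) -> int:
    """Return how many identical pods fit on one node.

    The scarcer resource sets the limit, so take the minimum
    of the CPU-based and memory-based counts.
    """
    by_cpu = node.cpu // pod_request.cpu
    by_memory = node.memory_gib // pod_request.memory_gib
    return min(by_cpu, by_memory)


# Values from the example: 4 CPU / 8 GiB nodes, 1 CPU / 2 GiB pods.
node = Resources(cpu=4, memory_gib=8)
pod = Resources(cpu=1, memory_gib=2)
print(pods_per_node(node, pod))  # 4
```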

If we want to add 6 additional pods to the cluster, 3 of them can be scheduled while the other 3 are stuck in Pending:

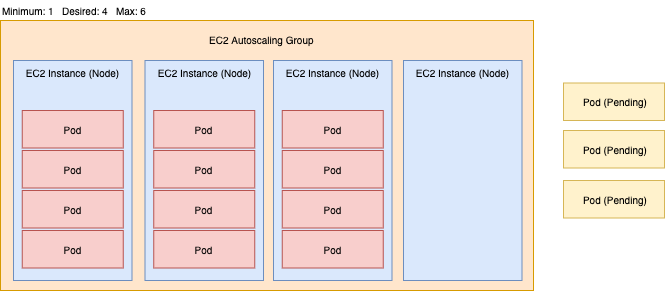
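To see where the "3 scheduled, 3 Pending" split comes from, here is a toy placement loop. It is only a sketch: the real Kubernetes scheduler filters and scores nodes on many criteria, while this version simply fills the first node with a free slot. It also assumes 9 pods are already running, 3 per node, which is inferred from the numbers in the example (3 nodes with 4 slots each, and room for only 3 of the 6 new pods). The function name `schedule` is hypothetical.

```python
from __future__ import annotations


def schedule(new_pods: int, running_per_node: list[int], pods_per_node: int) -> tuple[list[int], int]:
    """Place each new pod on the first node with a free slot.

    Returns the updated per-node pod counts and the number of pods
    that could not be placed (these would stay Pending).
    """
    counts = list(running_per_node)
    pending = 0
    for _ in range(new_pods):
        for i, running in enumerate(counts):
            if running < pods_per_node:
                counts[i] += 1
                break
        else:
            # No node had a free slot for this pod.
            pending += 1
    return counts, pending


# Assumption: 3 nodes, each already running 3 of a possible 4 pods.
counts, pending = schedule(new_pods=6, running_per_node=[3, 3, 3], pods_per_node=4)
print(counts, pending)  # [4, 4, 4] 3
```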

Traditionally, we would need to intervene manually and raise the desired count of the autoscaling group to accommodate these pods on a new node. However, the autoscaler notices that these pods cannot be scheduled and increases the desired count on our behalf. This launches another EC2 instance, which automatically joins our cluster.

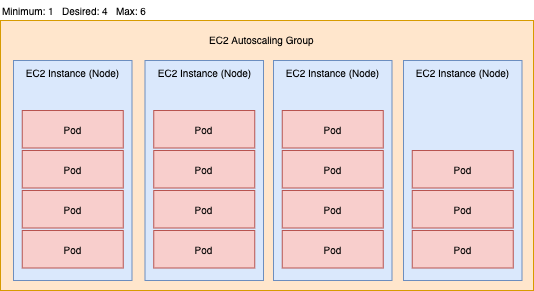

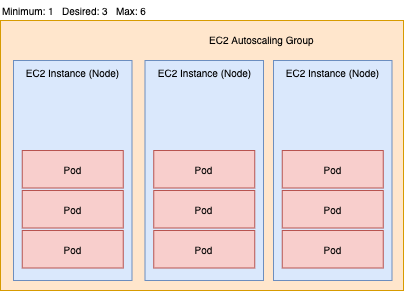

Once the node is ready, the pending pods can be scheduled successfully.
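The scale-up decision in this example reduces to a small calculation. This is a simplified sketch, not the Cluster Autoscaler's actual algorithm: it assumes identical pods and nodes, and the function name `scale_up_desired` is made up for illustration. The one rule it does capture is that the desired count never exceeds the group's maximum.

```python
import math


def scale_up_desired(current_desired: int, max_size: int, pending_pods: int, pods_per_node: int) -> int:
    """Return the new desired node count needed to fit the pending pods.

    The result is capped at the autoscaling group's maximum size.
    """
    nodes_needed = math.ceil(pending_pods / pods_per_node)
    return min(current_desired + nodes_needed, max_size)


# Values from the example: desired 3, maximum 6, 3 Pending pods, 4 pods per node.
print(scale_up_desired(current_desired=3, max_size=6, pending_pods=3, pods_per_node=4))  # 4
```

With 20 Pending pods the same call would ask for 5 more nodes, but the result would be capped at 6, leaving some pods Pending.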

Similarly, if we were to remove 6 pods, the autoscaler would notice the low utilization on the nodes and attempt to reduce the desired count of the ASG, and therefore the number of nodes. The pods running on the node targeted for removal are gracefully drained, allowing them to be rescheduled on other nodes.
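The scale-down check can be sketched in the same spirit. The version below only asks whether a node's pods would fit into the free slots on the remaining nodes, and it refuses to go below the group's minimum. The real autoscaler applies further checks that are omitted here (for example utilization thresholds and pod disruption budgets). The pod distribution `[3, 2, 2, 2]` is an assumed state after removing 6 pods from a 4-node cluster, and `removable_node` is a hypothetical name.

```python
from __future__ import annotations


def removable_node(pods_on_nodes: list[int], pods_per_node: int, min_size: int) -> int | None:
    """Return the index of a node that can be drained, or None.

    A node qualifies when the cluster is above its minimum size and
    all of the node's pods fit into free slots on the other nodes.
    Least-loaded nodes are considered first.
    """
    if len(pods_on_nodes) <= min_size:
        return None
    by_load = sorted(range(len(pods_on_nodes)), key=lambda i: pods_on_nodes[i])
    for i in by_load:
        free_elsewhere = sum(
            pods_per_node - count for j, count in enumerate(pods_on_nodes) if j != i
        )
        if pods_on_nodes[i] <= free_elsewhere:
            return i
    return None


# Assumed state: 4 nodes holding 9 pods in total, 4 slots per node, minimum of 1 node.
print(removable_node([3, 2, 2, 2], pods_per_node=4, min_size=1))  # 1
```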

Autoscaler Troubleshooting

The Cluster Autoscaler is itself deployed as a pod on Kubernetes, in the cluster-autoscaler namespace. You can view the logs for this pod using kubectl or with Kibana.
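As a starting point for pulling the logs from a script, the sketch below builds a `kubectl logs` command for the `cluster-autoscaler` namespace. The label selector is an assumption: it depends on how the autoscaler was deployed, so check the pod's actual labels (for example with `kubectl get pods --namespace=cluster-autoscaler --show-labels`) before relying on it. The function names are illustrative.

```python
from __future__ import annotations

import subprocess

NAMESPACE = "cluster-autoscaler"  # namespace named in this document
# Hypothetical label; replace with the labels actually set on the autoscaler pod.
SELECTOR = "app.kubernetes.io/name=cluster-autoscaler"


def autoscaler_logs_command(selector: str = SELECTOR, namespace: str = NAMESPACE, tail: int = 100) -> list[str]:
    """Build the kubectl command that prints the last `tail` log lines."""
    return [
        "kubectl",
        "logs",
        f"--namespace={namespace}",
        f"--selector={selector}",
        f"--tail={tail}",
    ]


def fetch_autoscaler_logs() -> str:
    """Run the command and return its output; needs kubectl and cluster access."""
    result = subprocess.run(autoscaler_logs_command(), capture_output=True, text=True, check=True)
    return result.stdout


# Print the command rather than running it, so this works without a cluster.
print(" ".join(autoscaler_logs_command()))
```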

In the event that the autoscaler is not behaving correctly and manual intervention is needed, you can adjust the desired count of the autoscaling groups manually through the AWS console.

See this guide for more information on how to do this.
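If the console is not convenient, the same adjustment could in principle be scripted. The following is a hedged sketch, not our documented procedure: it assumes the `boto3` library, AWS credentials with permission to modify the group, and a hypothetical group name. The helper `set_desired_count` is an illustrative name. It takes the client as a parameter and checks the requested value against the group's minimum and maximum before changing anything.

```python
def set_desired_count(autoscaling_client, group_name: str, desired: int) -> int:
    """Set the desired capacity of an autoscaling group after a bounds check.

    `autoscaling_client` is expected to be a boto3 Auto Scaling client,
    i.e. the object returned by boto3.client("autoscaling").
    """
    response = autoscaling_client.describe_auto_scaling_groups(
        AutoScalingGroupNames=[group_name]
    )
    groups = response["AutoScalingGroups"]
    if not groups:
        raise ValueError(f"autoscaling group {group_name!r} not found")

    group = groups[0]
    if not group["MinSize"] <= desired <= group["MaxSize"]:
        raise ValueError(
            f"desired count {desired} is outside "
            f"[{group['MinSize']}, {group['MaxSize']}]"
        )

    autoscaling_client.set_desired_capacity(
        AutoScalingGroupName=group_name,
        DesiredCapacity=desired,
        HonorCooldown=False,
    )
    return desired


# Example usage (hypothetical group name; requires boto3 and AWS credentials):
#   import boto3
#   set_desired_count(boto3.client("autoscaling"), "my-node-group", 4)
```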